MIT scientists trick Google AI into misidentifying a cat as guacamole

Scientists at MIT's LabSix, an artificial intelligence research group, tricked Google's image-recognition AI called InceptionV3 into thinking that a baseball was an espresso, a 3D-printed turtle was a firearm, and a cat was guacamole.

The experiment might seem outlandish at first, but the results demonstrate why relying on machines to identify objects in the real world could be problematic. For example, the cameras on self-driving cars use similar technology to identify pedestrians while in motion and in all sorts of weather conditions. If an image of a stop sign were blurred or otherwise altered, an AI program controlling a vehicle could theoretically misidentify it, leading to terrible outcomes.

The results of the study, which were published online today, show that AI programs are prone to misidentifying real-world objects that are slightly distorted, whether the distortion is intentional or not.

AI scientists call these manipulated objects or images, such as a turtle with a textured surface that might mimic the surface of a rifle, "adversarial examples."

"Our work demonstrates that adversarial examples are a significantly larger problem in real world systems than previously thought," the scientists wrote in the published research.

The example of the 3D-printed turtle illustrates their point. In the first experiment, the team presented a typical turtle to Google's AI program, which correctly classified it as a turtle. Then the researchers modified the texture on the shell in minute ways, almost imperceptible to the human eye, which made the machine identify the turtle as a rifle.
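To make the idea of a minute, targeted change concrete, here is a minimal sketch in Python. It is not LabSix's method and does not use InceptionV3: the two-class linear classifier, the random `weights`, the `pixels` vector, and the helper names `predict` and `targeted_perturbation` are all assumptions made for illustration. It shows how nudging every input value by the same tiny amount, in a carefully chosen direction, can flip a classifier's answer.

```python
import numpy as np

def predict(weights, pixels):
    """Return the index of the highest-scoring class of a linear classifier."""
    return int(np.argmax(weights @ pixels))

def targeted_perturbation(weights, pixels, source, target, overshoot=1.1):
    """Uniform-magnitude change just large enough (10% overshoot) to flip source to target."""
    # Gradient of (target score - source score) with respect to the input.
    direction = weights[target] - weights[source]
    # How far the source class currently leads the target class.
    margin = float((weights[source] - weights[target]) @ pixels)
    # Moving every value by epsilon along sign(direction) gains epsilon * ||direction||_1.
    epsilon = overshoot * margin / np.abs(direction).sum()
    return epsilon * np.sign(direction), epsilon

# Hypothetical toy data: a random two-class model and a random "image".
rng = np.random.default_rng(0)
weights = rng.normal(size=(2, 1024))
pixels = rng.uniform(0.0, 1.0, size=1024)

source = predict(weights, pixels)   # stand-in for the correct label ("turtle")
target = 1 - source                 # stand-in for the attacker's label ("rifle")

# Values are not clipped back to [0, 1], to keep the arithmetic transparent.
delta, epsilon = targeted_perturbation(weights, pixels, source, target)
adversarial = pixels + delta

print("clean prediction:", predict(weights, pixels))
print("adversarial prediction:", predict(weights, adversarial))
print("per-value change:", epsilon)
```

A deep network such as InceptionV3 is not linear, so a real attack would typically take many small gradient steps rather than one closed-form step; the toy only illustrates why a small, well-aimed change can be enough.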

The striking observation in LabSix's study is that the manipulated or "perturbed" turtle was misclassified at most angles, even when the researchers flipped the turtle over.

To create this nuanced design trickery, the MIT researchers used their own program, specifically designed to create "adversarial" images. This program simulated conditions such as blur and rotation that an AI program would likely encounter in the real world, much like the input an AI might get from cameras on fast-moving self-driving cars.
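One way to picture "simulating real-world situations" is to optimize a single perturbation against many randomly transformed views at once, rather than against one fixed image. The sketch below is a toy under stated assumptions, not the researchers' program: the 1-D `signal`, the linear two-class model, the circular shift standing in for rotation, the 3-tap blur, the brightness gain range, and every function name are hypothetical choices made for illustration.

```python
import numpy as np

def blur(signal):
    """Circular 3-tap blur; the kernel is symmetric, so the blur is its own transpose."""
    return 0.25 * np.roll(signal, 1) + 0.5 * signal + 0.25 * np.roll(signal, -1)

def transform(signal, shift, gain, use_blur):
    """One simulated view: shift (stand-in for rotation), optional blur, brightness gain."""
    view = np.roll(signal, shift)
    if use_blur:
        view = blur(view)
    return gain * view

def transform_adjoint(vector, shift, gain, use_blur):
    """Transpose of `transform`; pulls a gradient back onto the untransformed signal."""
    pulled = gain * vector
    if use_blur:
        pulled = blur(pulled)
    return np.roll(pulled, -shift)

def sample_views(rng, count, size):
    """Draw random (shift, gain, use_blur) settings."""
    return [(int(rng.integers(0, size)),
             float(rng.uniform(0.7, 1.3)),
             bool(rng.integers(0, 2))) for _ in range(count)]

def mean_margin(direction, signal, views):
    """Average of (target score - source score) across the sampled views."""
    return float(np.mean([direction @ transform(signal, *view) for view in views]))

def robust_perturbation(direction, views, epsilon):
    """Maximize the mean margin over all views within an L-infinity budget."""
    gradient = np.mean([transform_adjoint(direction, *view) for view in views], axis=0)
    return epsilon * np.sign(gradient)

rng = np.random.default_rng(0)
size = 256
weights = rng.normal(size=(2, size))
direction = weights[1] - weights[0]   # class 1 plays "rifle", class 0 plays "turtle"
signal = rng.uniform(0.0, 1.0, size=size)
views = sample_views(rng, 200, size)

delta = robust_perturbation(direction, views, epsilon=0.05)
clean = mean_margin(direction, signal, views)
attacked = mean_margin(direction, signal + delta, views)
print("mean margin before:", clean)
print("mean margin after: ", attacked)
```

Because the toy model and the transformations are linear, the averaged objective has a closed-form maximizer. Against a deep network, the same averaging would instead be applied inside an iterative gradient-based optimizer.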

With the seemingly incessant progression of AI technologies and their application in our lives (cars, image generation, self-taught programs), it's important that some researchers are attempting to fool our advanced AI programs; doing so exposes their weaknesses.

After all, you wouldn't want a camera on your autonomous vehicle to mistake a stop sign for a person — or a cat for guacamole.

Featured Video For You

Walmart is testing self-scanning robots

(责任编辑:产品中心)

-

'Terminator Zero' creators find fresh life in sci

In the 40 years since a low-budget action film from a relatively unknown filmmaker and crew blasted

...[详细]

In the 40 years since a low-budget action film from a relatively unknown filmmaker and crew blasted

...[详细]

-

中国山东网青岛10月17日讯(记者 杨广科 通讯员 杨发鹏 谭晓鹏 曲刚) 在党的十九大即将召开之际,一场特别的展览在平度市广州路小学举行,该校美术老师郑峰雷《五十六个民族大团结》36米巨幅剪纸深深吸

...[详细]

中国山东网青岛10月17日讯(记者 杨广科 通讯员 杨发鹏 谭晓鹏 曲刚) 在党的十九大即将召开之际,一场特别的展览在平度市广州路小学举行,该校美术老师郑峰雷《五十六个民族大团结》36米巨幅剪纸深深吸

...[详细]

-

中国山东网青岛8月27日讯(记者 刘淑红) 8月26日下午,为总结新媒体统战工作取得的阶段性成效,继续深化新媒体统战工作创新发展,市南区新媒体联谊组织“同心慧”举行两周年恳谈会

...[详细]

中国山东网青岛8月27日讯(记者 刘淑红) 8月26日下午,为总结新媒体统战工作取得的阶段性成效,继续深化新媒体统战工作创新发展,市南区新媒体联谊组织“同心慧”举行两周年恳谈会

...[详细]

-

21日,记者从济青高速铁路有限公司获悉,济青高铁青岛机场站目前已具备开通运营条件,计划于8月12日前正式开通运营,届时从济南可直达胶东机场,济南市民乘坐部分国际航班也将更加方便。7月19日-20日,山

...[详细]

21日,记者从济青高速铁路有限公司获悉,济青高铁青岛机场站目前已具备开通运营条件,计划于8月12日前正式开通运营,届时从济南可直达胶东机场,济南市民乘坐部分国际航班也将更加方便。7月19日-20日,山

...[详细]

-

Pakistan Cricket at crossroads after shock defeat at Pindi

KARACHI:Bangladesh cricket team's stunning ten-wicket Test victory at Rawalpindi on Sunday yet a

...[详细]

KARACHI:Bangladesh cricket team's stunning ten-wicket Test victory at Rawalpindi on Sunday yet a

...[详细]

-

青岛私募基金再度交出靓丽月报,来自中国基金业协会的最新数据显示,截至7月底,青岛已备案私募基金数量达1340只,较上月增加77只,同比增长达93.92%。私募基金管理人数量、管理基金数量以及管理基金规

...[详细]

青岛私募基金再度交出靓丽月报,来自中国基金业协会的最新数据显示,截至7月底,青岛已备案私募基金数量达1340只,较上月增加77只,同比增长达93.92%。私募基金管理人数量、管理基金数量以及管理基金规

...[详细]

-

近日,省农业农村厅印发了《四川省“十四五”农业农村信息化发展推进方案》,为发展数字化生产力,推动现代信息技术与农业农村各领域、各环节深度融合提供了行动指南。我市也加紧落实以数字乡村建设赋能乡村振兴,用

...[详细]

近日,省农业农村厅印发了《四川省“十四五”农业农村信息化发展推进方案》,为发展数字化生产力,推动现代信息技术与农业农村各领域、各环节深度融合提供了行动指南。我市也加紧落实以数字乡村建设赋能乡村振兴,用

...[详细]

-

本报讯日前,记者从雨城区农业农村局获悉,雨城区以开展农村人居环境整治提升“百日攻坚”行动集中整治为契机,把“干部带头、党员示范、群众参与”作为切入点,打出“加减乘除”组合拳,全面落实“百日攻坚”行动各

...[详细]

本报讯日前,记者从雨城区农业农村局获悉,雨城区以开展农村人居环境整治提升“百日攻坚”行动集中整治为契机,把“干部带头、党员示范、群众参与”作为切入点,打出“加减乘除”组合拳,全面落实“百日攻坚”行动各

...[详细]

-

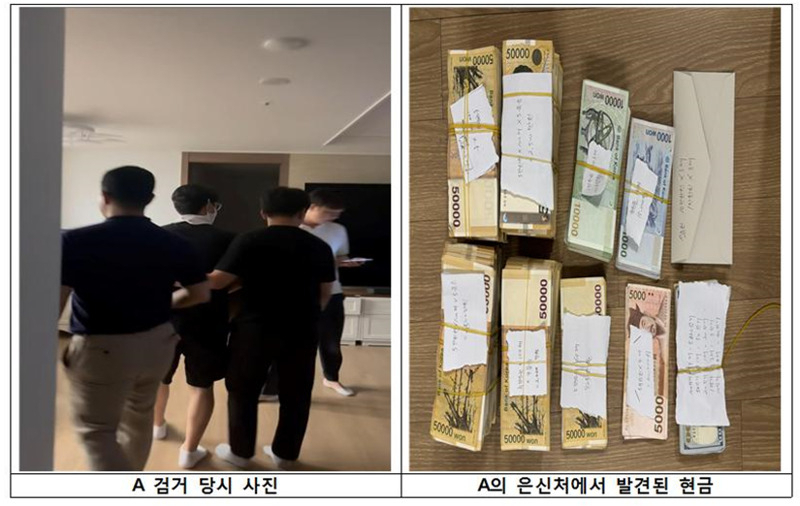

Police bust crypto scammer who received plastic surgery to evade arrest

Photo of a man in his 40s at the time of his arrest (left) and cash found at at his hideout. (Seoul

...[详细]

Photo of a man in his 40s at the time of his arrest (left) and cash found at at his hideout. (Seoul

...[详细]

-

銆€銆€铏界劧鍐皬鍒氱殑銆婅姵鍗庛€嬨€侀倱瓒呯殑銆婂績鐞嗙姜涔嬪煄甯備箣鍏夈€嬩复闃垫挙妗o紝浣嗕粖骞村浗搴嗘。绔炰簤杩樻槸寮傚父婵€鐑堬紝鎴愰緳銆佸垬寰峰崕銆佺攧瀛愪腹绛夌數褰卞ぇ鍜栵紝浠ュ強寮

...[详细]

銆€銆€铏界劧鍐皬鍒氱殑銆婅姵鍗庛€嬨€侀倱瓒呯殑銆婂績鐞嗙姜涔嬪煄甯備箣鍏夈€嬩复闃垫挙妗o紝浣嗕粖骞村浗搴嗘。绔炰簤杩樻槸寮傚父婵€鐑堬紝鎴愰緳銆佸垬寰峰崕銆佺攧瀛愪腹绛夌數褰卞ぇ鍜栵紝浠ュ強寮

...[详细]

平安人寿青岛分公司:举办“新青岛 新征程 新辉煌”区部课经理大会

平安人寿青岛分公司:举办“新青岛 新征程 新辉煌”区部课经理大会 《胡杨的夏天》首映礼再到青岛 观众怀念“朱陈CP”感动落泪

《胡杨的夏天》首映礼再到青岛 观众怀念“朱陈CP”感动落泪 最会做菜的佛山,拥有最可口的年味丨广东年货大摸底佛山篇

最会做菜的佛山,拥有最可口的年味丨广东年货大摸底佛山篇